Cash-out rate: 60%+

In Totsuka Ward, Yokohama? Get cash with "credit card cash-out" (クレジットカード現金化)!

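This article explains "credit card cash-out" (クレジットカード現金化), a way to raise cash in Totsuka Ward, Yokohama. You don't need a consumer finance company: a single credit card is enough to get cash. Use the credit card you already have to cover a cash shortfall! Totsuka Ward, Yokohama...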


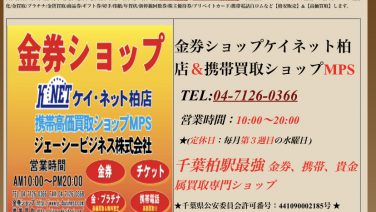
